I am a Software Consultant

I am a jack of all trades and master of at least some. From putting servers into racks and managing infrastructure to developing back ends and designing front ends, I do full-stack development in its truest sense. But if I had to choose, I would pick software development and software architecture as the aspects I enjoy most.

When I am writing code, I strive to keep it as simple as possible and to express its intent concisely and precisely without useless embellishments.

In my free time, I maintain and contribute to some small open source projects. See below for a list of projects.

I was an Interdisciplinary Researcher

During my academic career, I obtained degrees internationally in Cognitive Computer Science and Computational Neuroscience, as well as a PhD in Systems Design Engineering. This broad background allows me to make sense of and integrate information from many different research areas. During my doctoral studies, I built a spiking neural network model of short- and long-term memory that connects neuroscience and psychology. Besides that, I made various contributions that advance the field of large-scale neural modelling.

See below for a list of publications.

I am a Rock Climber

When I started rock climbing in 2011, it quickly became another passion of mine. The sport requires a unique combination of physical fitness, mental focus, and problem solving. I prefer technical routes that challenge my analytical problem-solving skills.

See my profile on The Crag for my ascents.

Projects

Nengo

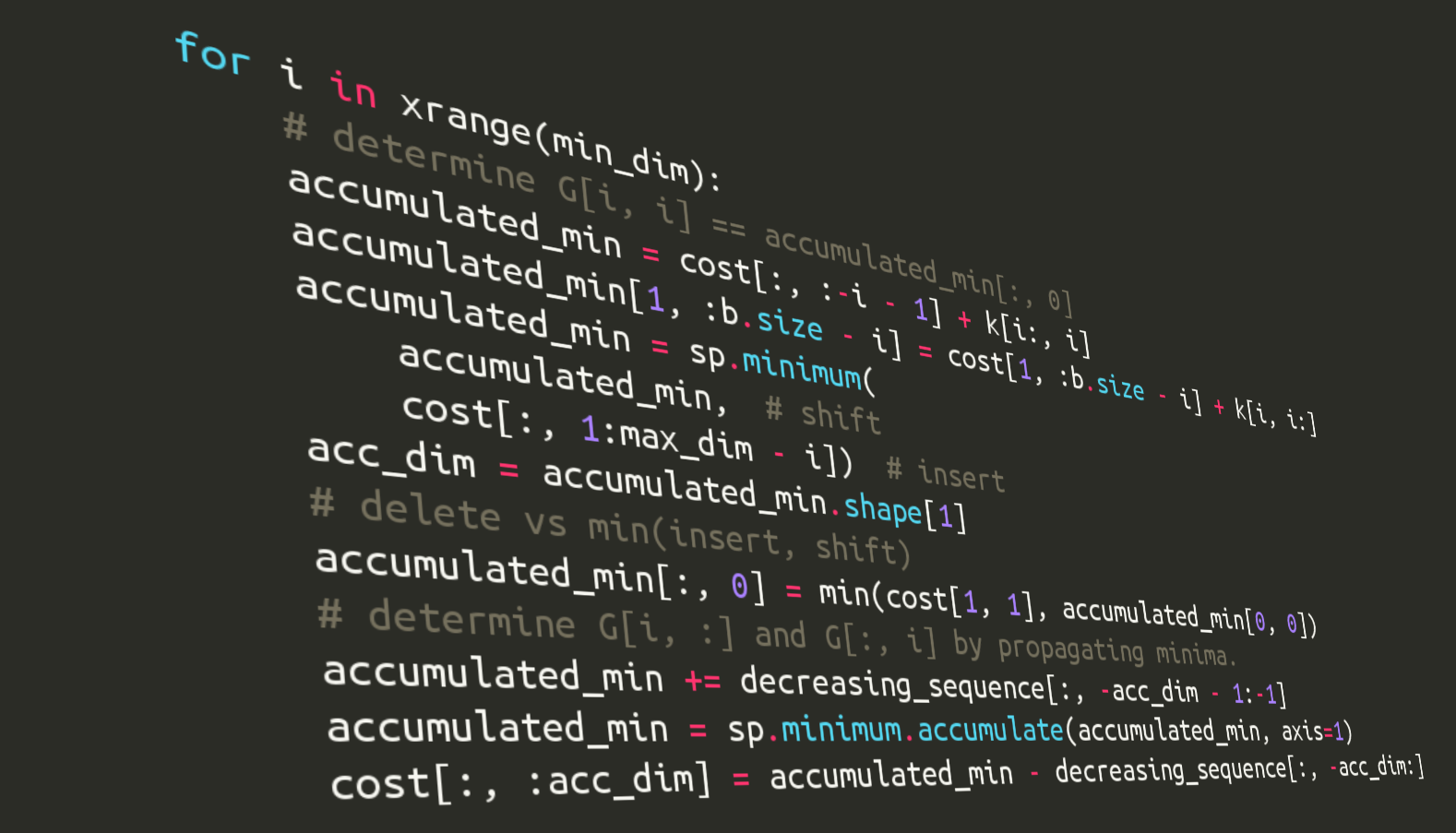

I contributed to various parts of the Nengo neural network simulator, including the core simulator, the web-based GUI, and Nengo SPA, an implementation of the Semantic Pointer Architecture. In particular, I conceived and implemented an optimizer that speeds up simulations by almost a factor of 7. I also integrated decoder caching and the progress bar. On the GUI side, my main contributions were refactoring and improving the WebSocket and HTTP server of the backend.

pylint-venv

A module that provides a Pylint init-hook to have Pylint respect the currently activated virtual environment. This makes it possible to use a single Pylint installation with multiple virtual environments.

Neo2 keyboard layout

For the ergonomic Neo2 keyboard layout, I provided an improved keyboard layout file and accompanying Karabiner-Elements rules for macOS. These have now been integrated into the upstream layout.

Doveseed

Doveseed is a minimalistic backend service, written in Python, for email subscriptions to RSS feeds.

dmarc-metrics-exporter

Exports metrics derived from DMARC aggregate reports to Prometheus. This exporter regularly polls for new aggregate report emails via IMAP.

GopPy

GopPy (Gaussian Online Processes for Python) provides an implementation of Gaussian Processes for Python with support for efficient online updates. At the time of development, all existing Python modules required a complete retraining of the Gaussian Process.

Spykeutils

I contributed highly optimized implementations of spike train metrics to the spykeutils project, a Python library for analyzing neurophysiological recordings.

Publications

Jan Gosmann and Chris Eliasmith

CUE: A unified spiking neuron model of short-term and long-term memory

We present the context-unified encoding (CUE) model, a large-scale spiking neural network model of human memory. It combines and integrates activity-based short-term memory with weight-based long-term memory. The implementation with spiking neurons ensures biological plausibility and allows for predictions on the neural level. At the same time, the model produces behavioral outputs that have been matched to human data from serial and free recall experiments. In particular, well-known results such as primacy, recency, transposition error gradients, and forward recall bias have been reproduced with good quantitative matches. Additionally, the model accounts for the Hebb repetition effect. The CUE model combines and extends the ordinal serial encoding (OSE) model, a spiking neuron model of short-term memory, and the temporal context model (TCM), a mathematical memory model matching free recall data. To implement the modification of the required association matrices, a novel learning rule, the association matrix learning rule (AML), is derived that allows for one-shot learning without catastrophic forgetting. Its biological plausibility is discussed and it is shown that it accounts for changes in neural firing observed in human recordings from an association learning experiment.

Jan Gosmann and Chris Eliasmith

Vector-Derived Transformation Binding: An Improved Binding Operation for Deep Symbol-Like Processing in Neural Networks

We present a new binding operation, vector-derived transformation binding (VTB), for use in vector symbolic architectures (VSA). The performance of VTB is compared to circular convolution, used in holographic reduced representations (HRRs), in terms of list and stack encoding capacity. A special focus is given to the possibility of a neural implementation by means of the Neural Engineering Framework (NEF). While the scaling of required neural resources is slightly worse for VTB, VTB is found to be on par with circular convolution for list encoding and better for encoding of stacks. Furthermore, VTB influences the vector length less, which also benefits a neural implementation. Consequently, we argue that VTB is an improvement over HRRs for neurally implemented VSAs.

Jan Gosmann and Chris Eliasmith

Automatic Optimization of the Computation Graph in the Nengo Neural Network Simulator

One critical factor limiting the size of neural cognitive models is the time required to simulate such models. To reduce simulation time, specialized hardware is often used. However, such hardware can be costly, not readily available, or require specialized software implementations that are difficult to maintain. Here, we present an algorithm that optimizes the computational graph of the Nengo neural network simulator, allowing simulations to run more quickly on commodity hardware. This is achieved by merging identical operations into single operations and restructuring the accessed data in larger blocks of sequential memory. In this way, a speed-up of up to 6.8 times is obtained. While this does not beat the specialized OpenCL implementation of Nengo, this optimization is available on any platform that can run Python. In contrast, the OpenCL implementation supports fewer platforms and can be difficult to install.

Ivana Kajić, Jan Gosmann, Terrence C. Stewart, Thomas Wennekers, and Chris Eliasmith

A Spiking Neuron Model of Word Associations for the Remote Associates Test

Generating associations is important for cognitive tasks including language acquisition and creative problem solving. It remains an open question how the brain represents and processes associations. The Remote Associates Test (RAT) is a task, originally used in creativity research, that is heavily dependent on generating associations in a search for the solutions to individual RAT problems. In this work we present a model that solves the test. Compared to earlier modeling work on the RAT, our hybrid (i.e. non-developmental) model is implemented in a spiking neural network by means of the Neural Engineering Framework (NEF), demonstrating that it is possible for spiking neurons to be organized to store the employed representations and to manipulate them. In particular, the model shows that distributed representations can support sophisticated linguistic processing. The model was validated on human behavioral data including the typical length of response sequences and similarity relationships in produced responses. These data suggest two cognitive processes that are involved in solving the RAT: one process generates potential responses and a second process filters the responses.

Jan Gosmann and Chris Eliasmith

Optimizing Semantic Pointer Representations for Symbol-Like Processing in Spiking Neural Networks

The Semantic Pointer Architecture (SPA) is a proposal specifying the computations and architectural elements needed to account for cognitive functions. By means of the Neural Engineering Framework (NEF), this proposal can be realized in a spiking neural network. However, in any such network each SPA transformation will accumulate noise. By increasing the accuracy of common SPA operations, the overall network performance can be increased considerably. As well, the representations in such networks present a trade-off between being able to represent all possible values and being only able to represent the most likely values, but with high accuracy. We derive a heuristic to find the near-optimal point in this trade-off. This allows us to improve the accuracy of common SPA operations by up to 25 times. Ultimately, it allows for a reduction in the number of neurons and a more efficient use of both traditional and neuromorphic hardware, which we demonstrate here.